221. The k-nearest neighbors algorithm

Learn k-NN by using it in classification, regression, and interpolation case studies

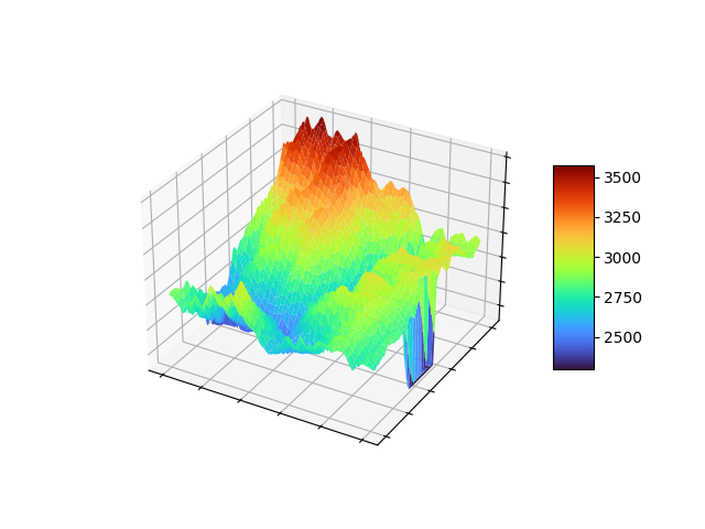

k-nearest neighbors is one of the most versatile and robust machine learning algorithms there is. In this course we'll walk through its implementation in Python (just a few lines of code) and how to use it for classification, regression, and interpolation. We'll apply it to several different types of data sets, too: identifying penguins, pricing diamonds, and interpolating spatial data, as we would need to do if mapping ocean temperatures with automated drones.
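To give a feel for how little code the core idea needs, here is a minimal sketch of a k-NN classifier in plain Python. It is not the course's own implementation: the function name `knn_predict`, the toy data, and the choice of Euclidean distance with a simple majority vote are all assumptions made for illustration.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, query, k=3):
    """Predict a label for `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every training point.
    distances = [math.dist(row, query) for row in train_features]
    # Indices of the k closest training points, nearest first.
    nearest = sorted(range(len(distances)), key=distances.__getitem__)[:k]
    # Majority vote; on a tie, the label seen first among the nearest wins.
    votes = Counter(train_labels[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Made-up toy data: two features per point, two classes.
features = [(1.0, 1.1), (1.2, 0.9), (0.8, 1.0), (3.0, 3.2), (3.1, 2.9), (2.9, 3.0)]
labels = ["a", "a", "a", "b", "b", "b"]

prediction_low = knn_predict(features, labels, (1.1, 1.0), k=3)
prediction_high = knn_predict(features, labels, (3.0, 3.0), k=3)
print(prediction_low, prediction_high)  # prints: a b
```

A real data set would also need the kind of cleanup, normalization, and tie handling that the lessons below cover; this sketch skips all of that.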

At this point, the course is still a work in progress, but it's open for registration. If you'd like to preview the material, the first portion of the course is freely available in the lesson list below. Prerequisites are the basics of Python -- like for loops, functions and f-strings -- or at least the willingness to learn them. We'll walk through all the code line by line, so you should be able to fill in any gaps you have as we go.

And if you're interested in the other End to End Machine Learning course offerings, keep in mind that you can get access to the entire course catalog for just $9 USD per month or $99 USD for all time.

Course Curriculum

- Penguins Intro (4:35)
- Penguins 0. Read in the penguins data file (3:42)
- Penguins 1. Break out each line into its fields (3:28)
- Penguins 2. Convert numerical fields to floats (3:41)
- Penguins 3. Handle NAs in the numerical fields (2:40)
- Penguins 4. Convert sex field to a number (3:05)
- Penguins 5. Handle NAs in the sex field (2:26)
- Penguins 6. Convert penguin species to a numerical code (1:49)
- Penguins 7. Populate feature and label arrays (7:43)
- Penguins 8. Divide the penguins up into training and testing groups
- Penguins 9. Randomly sort penguins into training and testing groups (5:24)
- How k-nearest neighbors works (26:19)
- Penguins 10. Find the nearest neighbors (6:42)
- Penguins 11. Find the predicted species and tally the score (5:55)
- Penguins 12. Account for ties (3:41)
- Penguins 13. Normalize features (7:01)
- Penguins 14. Monte Carlo cross validation (5:57)
- Penguins 15. Adjust train/test split (3:31)


Your Instructor

I love solving puzzles and building things. Machine learning lets me do both. I got started by studying robotics and human rehabilitation at MIT (MS '99, PhD '02), moved on to machine vision and machine learning at Sandia National Laboratories, then to predictive modeling of agriculture at DuPont Pioneer, and cloud data science at Microsoft. At Facebook I worked to get internet and electrical power to those in the world who don't have it, using deep learning and satellite imagery, and to do a better job of identifying topics reliably in unstructured text. Now at iRobot I work to help robots get better and better at doing their jobs. In my spare time I like to rock climb, write robot learning algorithms, and go on walks with my wife and our dog, Reign of Terror.